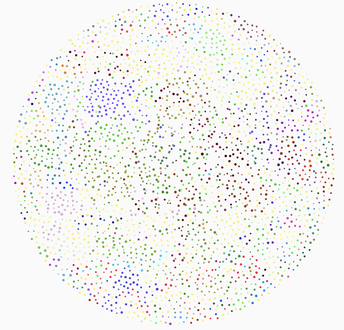

ASL-LEX: mapping the ASL lexicon

ASL-LEX is a lexical database that catalogues information about signs in American Sign Language (Caselli, Sevcikova Sehyr, Cohen-Goldberg, & Emmorey, 2016). It currently includes information about frequency (how often signs are used in everyday conversation), iconicity (how much signs look like what they mean), and phonology (which handshapes, locations, movements, etc. are used). ASL researchers can use it to design experiments or to develop technology. ASL teachers can use ASL-LEX to support vocabulary acquisition (e.g., to develop vocabulary lessons that prioritize commonly used signs). Students can also look up signs by their form, without knowing a sign’s English translation, and begin to learn about linguistic patterns in the forms of signs. This project is supported by the National Science Foundation.

ASL Vocabulary Acquisition

Deaf children have variable access to language early in life: they often lack signing role models and cannot hear the sounds of spoken language. This puts them at risk of language deprivation. This line of research explores how deaf children learn vocabulary and how these early experiences with language affect later language learning. The goals are to identify how children learn signs and which factors promote vocabulary acquisition, and to develop assessment tools for measuring early ASL vocabulary. This project is supported by the National Institutes of Health.

Sign Language Computation

The vast majority of communication technologies are designed for written and spoken languages (e.g., Siri, ChatGPT) and exclude people who use sign languages. With advances in machine learning, computer vision, and human pose estimation, interest in sign language computation is growing rapidly among computer scientists. My work explores the ethical landscape of this emerging field: Which sign language technologies do signing communities want (and not want)? What concerns may emerge about privacy, fairness, and audism? What kinds of datasets support high-quality sign recognition technology?